Trustworthy AI: Strategic, Responsible, Safe, Reliable, Scalable

2025 is the year of Trustworthy AI, as organizations worldwide deploy AI-infused solutions with limited consideration of how to make them safe. Safety matters not only from a compliance and risk management perspective, but also for creating AI that users themselves can trust. Trustworthy AI is top of mind for many people, though there is currently no standard approach to articulating what it means. Can we trust the results? Can we trust that AI is used safely? Is AI going to take our jobs? Will AI grow a personality and conquer the world by turning a society of toasters against us? To truly address these questions, it must be recognised that Trustworthy AI is more than red teaming, or testing against the pillars of responsible AI. It’s about creating a trustworthy experience grounded in good data, exposing cumulative human error and AI hallucinations through technical monitoring and human observability. It’s about building a digital ecosystem that is strategic, responsible, safe, reliable and scalable. It’s about making AI a trusted enabler for humans.

Trust in AI: Reducing Fears with a Strategic Vision

Org-wide trust in AI is hugely important for its successful adoption. Building that trust takes human involvement and intentionality as part of a long-term AI strategy and a clearly defined enablement program. Podcast listener Rachel Roberts comments that having a clear vision and planning a path towards the benefits of AI, instead of letting organic doubt take over, will build trust and reduce fear of uncertainty. She believes that being intentional can help mitigate doubts and foster trust in AI and in each other. A strategic vision is critical, and it must be collectively agreed upon and championed. Change management and training must also be fundamental to the effort, given the complexities of technology implementation and adoption. By combining these approaches, users can adapt, and organizations can maximize the impact of AI within their business.

Ethical Agents and the Path to Digital Equity

European regulations are becoming more stringent, aiming to ensure AI safety, ethics, and transparency. It's therefore crucial to ground AI solutions in good data and adhere to responsible AI (RAI) standards to avoid significant fines for non-compliance. The impact of robotics on the labor market is bringing opportunities: task automation through rules-based agents, and fully autonomous agents that shift the human role towards oversight of agent activities. Embracing an abundance mindset can unlock these new opportunities in the evolving landscape, though doing so requires attention to digital equity and ethics, and preparing data systems for future AI integration.

Executive Vision and Actionable Roadmap for your AI Strategy

There’s an incredibly important transition in the broad information technology space that is often lost in the furor and excitement over generative AI. Simply “wanting AI” doesn’t cut it. So, the AI Strategy Framework begins with the Strategy and Vision pillar that sets forth five dimensions beginning with vision, extending to creating the actionable roadmap and architecture necessary to actualize that vision, and finally establishing the programmatic elements necessary to drive that vision to fruition. These dimensions help organizations formulate and take action on their big ideas.

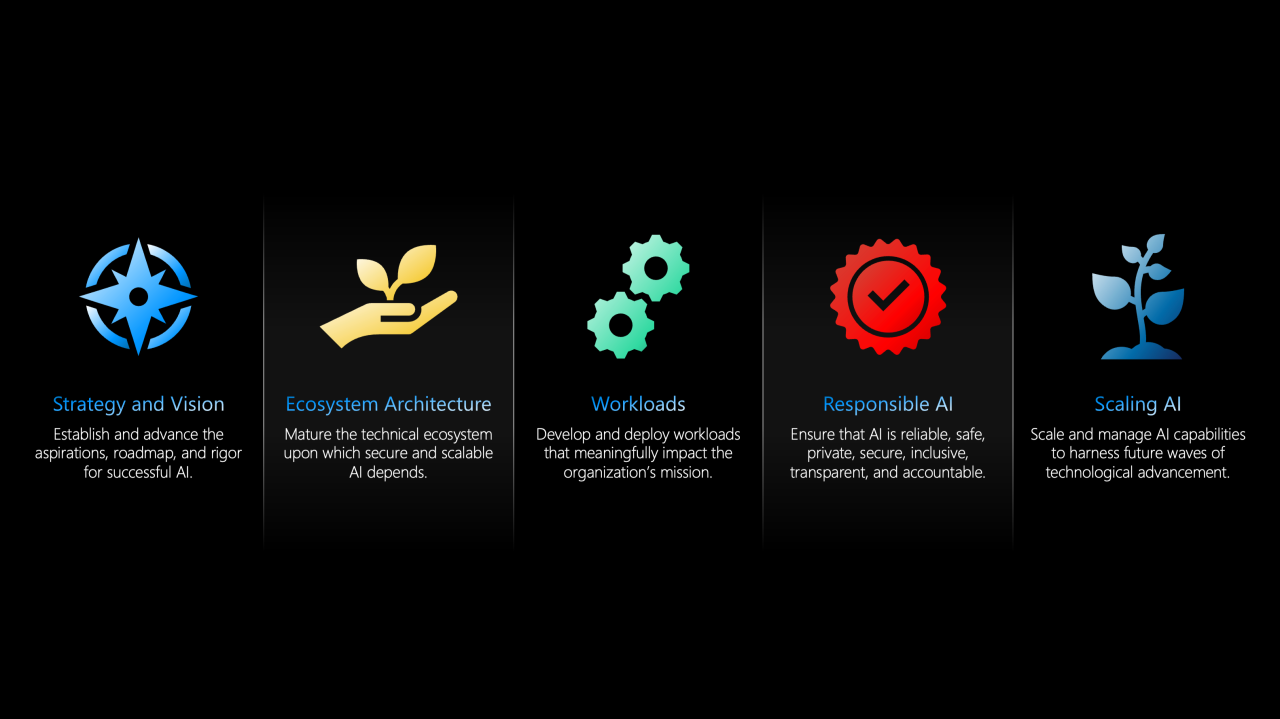

"AI Strategy Framework" guides your organization's journey in the Age of AI

We’ve learned a great deal about maturity and readiness for - and responsibility to the ethics of - AI over the past year, as well. It’s now time for a proper model through which organizations may realistically assess their readiness to adopt and scale artificial intelligence, and then identify specific areas to invest time, talent, and funding along their journey. This AI Strategy Framework guides organizations as they construct their AI strategy atop five pillars, each with five dimensions to be considered, matured, and regularly evaluated.

Copilot positioned to become the new UI of AI

With organizations today using 200-300 applications, what will be the impact on users when a profusion of AI solutions is added to the pile? As vendor AI offerings continue to expand, imagine the confusion. Employees required to access multiple agents with multiple UIs, scattered across their organization: an agent for HR relations, for sales, for service, and for vendor applications the likes of Workday, SAP, and Salesforce. A labyrinth of applications. Now imagine this simplified. Imagine all the isolated agents and their data integrated into a single UI, a single place of reference, giving clarity and accessibility and enabling high adoption. As announced by Satya Nadella, Copilot is positioned to become the new UI for AI.

Bot vs human: which will reign in consumer engagement?

At the dawn of ‘agentic’ AI, that is to say, autonomous bots capable of mimicking humans and making independent decisions, what will be the implications for our everyday lives? Perhaps an end to dreaded call centre dispute resolutions, replaced by bots that handle negotiations with instant access to indisputable contracts and policies, outmatching human agents. For e-commerce, AI assistants capable of re-ordering groceries online while finding the best discounts, fastest delivery, and lowest shipping costs, totally disrupting traditional e-commerce loyalty. Future AI has the potential to make daily life incredibly efficient and transform consumer engagement models in ways not yet fully realised.

The skeptical approach to security and AI

Staggeringly, if cybercrime were a country, it would have the third-largest GDP. With attacks happening every second, it’s never been more important to approach data security and AI with a zero-trust mindset: practicing insider risk management, auto-classifying data with Purview, and “red teaming” AI outputs. This critical thinking should also apply to future advancements, as we predict a shift towards observability whereby AI handles tasks and humans merely monitor them. Plus, as AI begins to mimic personas and styles, the risk of deepfakes increases, and users may not notice them unless they question what they see.

Embracing responsible AI with chaos engineering and governance

As AI systems become more integrated into our daily lives, it’s never been more critical to ensure they operate ethically. There are significant risks if these systems are not governed properly through informed practices, making responsible app development not just a necessity, but a cornerstone for building trustworthy AI systems that adhere to ethical standards and regulatory requirements. When an organization upholds this commitment, it not only mitigates potential harms but also fosters trust among users and stakeholders, thereby establishing the foundations for long-term success.

Whitepaper: “Crafting your Future-Ready Enterprise AI Strategy, e2”

It has become obvious how difficult so many organizations are finding it to actually craft and execute their AI strategy, in part because of the (often) decades they’ve spent kicking their proverbial data can down the road, in part because it turns out that enterprise-grade AI really does require the adoption of ecosystem-oriented architecture to truly scale, but largely because many organizations have no idea where to start. Many lack the wherewithal to really assess where they stand on day one, and to identify areas where they must mature to get to day 100 (and beyond).

We’ve learned a great deal about maturity and readiness for - and responsibility to the ethics of - AI over the past year, as well. It’s now time to broaden the thesis, so in this second edition we offer a model through which organizations may realistically assess their current maturity to adopt and scale artificial intelligence, and then identify specific areas to invest time, talent, and funding along their journey.