Building future-ready cloud ecosystems for the age of AI

I’m often asked, lately, “What should our AI strategy look like?”. So I am planning a lengthier whitepaper to expand on the topics that I’ll introduce below.

I mused in my piece earlier this year, Strategic thinking for the Microsoft Cloud, that “I really wonder how many will miss out on the AI wave because they lack the wisdom or the willpower to make the most of it.”

Then, two weeks ago under the headline Your employer is (probably) unprepared for artificial intelligence, The Economist suggested that divergence in technology investment—and the success of that investment across firms—has resulted in a “two-tier economy” wherein “firms that embrace tech are pulling away from the competition”. The piece cited several examples from around the world, including a nearly 11% rise in average worker productivity in Britain’s most productive firms from 2010 to 2019, a period where the least productive firms saw no rise.

Discussing AI in recent months I have often thought about the fable of the boiled frog, whereby a frog placed in boiling water jumps out, but a frog placed in warm water that is gradually heated lacks awareness of his impending demise until it is too late. Or, as I continue to remind the CIOs with whom I work closely, the grace period for organizations to get their act together and position themselves for the next wave is growing much shorter, the margin for error much more narrow.

Two foundational realities

So it is that I’ve been spending significant time and mental energy thinking about a proper “AI strategy” for organizations that wish to escape the sad fate of the frog, or that of the world’s organizations being left behind by the pace of technological change. I am encountering two foundational realities here time and again.

You are (probably) not ready; almost nobody is

First, very few—if any—organizations are truly prepared to make the most of the AI wave crashing on their shore. Very few have done the hard work to build the kind of proper, modern data platform required to make AI work at scale across their organization. For this reason, nearly everyone’s “AI strategy” will necessarily look very much like most everyone else’s AI strategy, at least in the early days as organizations across the economy and around the world scurry to get their house in order.

Future-ready, not future-proof

Second, nobody truly knows exactly what a mature AI capability will look like or exactly how this will play out in practice. Which is to say that any AI strategy must be flexible, able to absorb tomorrow what we don’t fully grasp today. Your strategy should also offer value to the organization beyond specific AI-driven workloads because the nature and value of these workloads will remain unclear for some time. This explains my fondness for the phrase future-ready, and why I cringe when I hear people say “future-proof”. The former describes a cloud ecosystem built with modern technologies using best practices that are most likely to absorb whatever future innovations come our way. The latter is unachievable in all circumstances.

Core concept behind AI acting on enterprise data

The most basic concept behind institutional AI: Enterprise data (raw knowledge) is stored such that it can be (a) indexed and (b) accessed by AI. Capabilities such as Azure AI services act on that knowledge to produce a response.

I’ve adapted and updated the model shown here from Pablo Castro’s great piece, Revolutionize your Enterprise Data with ChatGPT: Next-gen Apps w/ Azure OpenAI and Cognitive Search (March 2023), on Microsoft’s Azure AI services Blog.

In the top-right of the diagram we’re looking at various data sources sitting in a modern data platform (Azure SQL, OneLake, and Blob Storage are shown top to bottom for representative purposes). These data sources are indexed by Azure Cognitive Search, which also provides an enterprise-wide single search capability. Moving to the far left we see an application user experience (UX)—e.g., a mobile, tablet, or web app—that, of course, provides an end user the ability to interact with our particular workload. The application sitting beneath the UX queries the knowledge contained in Cognitive Search’s index (as derived from the data sources), and passes that prompt + knowledge to Azure AI services to generate an appropriate response to be fed back to the user.

Pillars for your AI strategy

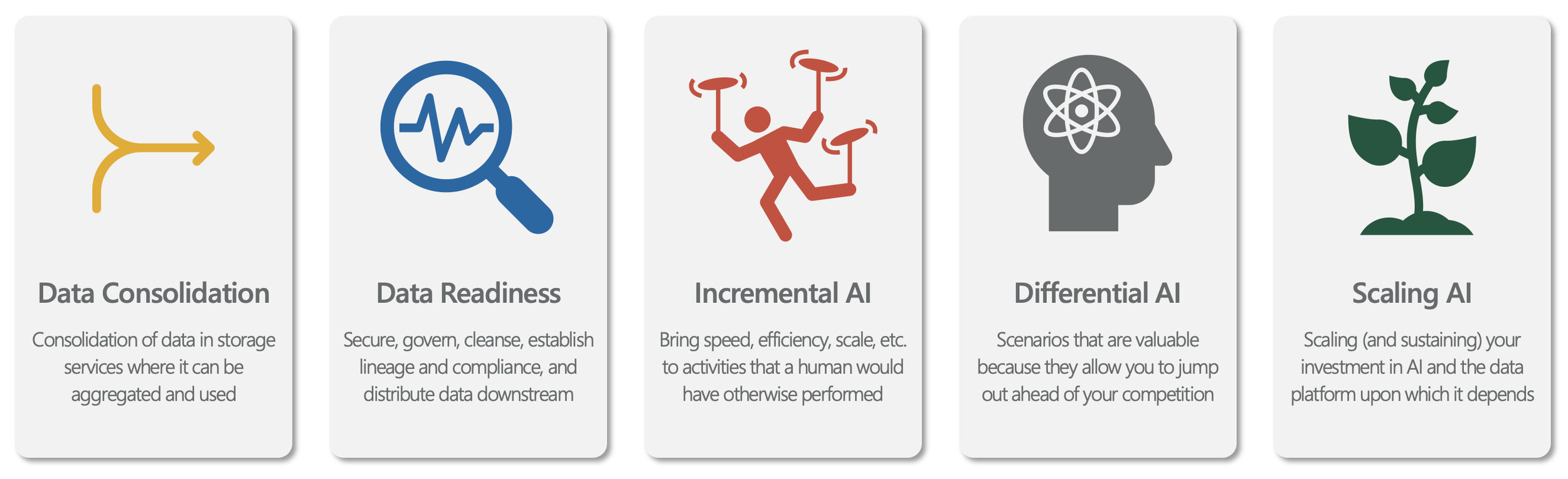

With that concept established, we’ll now turn our attention to what I have come to think of as the five pillars of your AI strategy. These are the pillars atop which I am guiding CIOs and other IT decision makers to formulate their strategies.

Data Consolidation

Pre-requisite to your use of AI is consolidation of data in storage services where it can be aggregated and used. Data is the essential fuel without which AI models cannot be trained nor have the capacity to act on the information that makes them most valuable. Unfortunately, for all the advancements in cloud technology of the last decade, most organizations are home to vast unconsolidated stores of data.

A notional architecture for data consolidation in practice working with the AI model we discussed earlier.

Returning to our earlier model, most organizations are likely to land on a data consolidation architecture that looks something like the diagram shown here. Wherein we see data migrated (dotted line) out of, for example, Access databases and Excel files into Dataverse, out of on-premise SQL into Azure SQL, or out of network storage into Azure Blob. You’ll then find yourself with a fairly sizable transactional data estate underpinning most of your applications whose data flows downstream to services such as OneLake. For example, data from Azure SQL is pushed or shortcutted into the lake.

But though we possess increasingly sophisticated technical capabilities to make data consolidation a reality, successfully executing on this pillar of your AI strategy requires the organizational will to do so. Grass roots action within a typical organization is insufficient and likely to result in further, randomized diffusion of data across the estate. Nor is point-solution oriented architecture, which tends to neglect data consolidation in favor of data storage in application-specific data services. Data consolidation is, for this reason, one of the most important executive-led priorities in technology today as IT shops the world over race to build future-ready ecosystems.

Data Readiness

Here we are referring to measures taken to secure, govern, cleanse, establish lineage and compliance, manage metadata, and—ultimately—distribute data to downstream consumers, including AI-driven workloads. In other words, have you established the conditions for your consolidated data to be used, and for its use thereof to produce quality outcomes?

There are, broadly speaking, three major work streams to be pursued here.

The first pertains to what I’ll call “data hygiene”, that is to say, security, governance, quality, lineage, compliance etc. Microsoft is investing heavily in its Purview capability to provide these solutions across the data estate, so I recommend that implementation of Purview be an early stage milestone in nearly every organization’s AI strategy, and that your Purview implementation be matured and kept current with the product’s latest capabilities over time.

The second of our Data Readiness work streams consists of good, old fashioned best practices around building and maturing your cloud landing zone and the ongoing maturation of your cloud estate. The essential task here is to mature your cloud infrastructure in accordance with industry best practices, with an emphasis on data security and governance.

Our third work stream here concerns core data distribution services generally and indexing specifically. Azure Cognitive Search is the linchpin here as it is increasingly becoming one of the principal “front doors” through which AI walks in order to use enterprise data. Its implementation ought to rank alongside Purview and Data Lake / OneLake in terms of specific data platform services required by an organization seeking to become future-ready in the age of AI.

Incremental AI

What I will call “Incremental AI” is a broad, conceptual category of workloads in which AI is applied to bring speed, efficiency, scale, accuracy, quality, etc. to activities that a human would have otherwise performed.

Microsoft’s Co-Pilot capabilities (for the most part) squarely fall into this bucket as they help their end user to find information more granularly, identify highest-potential sales targets more accurately, create content more quickly, book appointments more efficiently, write code more effectively, etc. These use cases tend to share two things in common:

They were already being performed by a human, and would have gone on being performed by a human with or without AI;

They are often performed in support of the organization’s overall purpose, not as the organization’s primary function.

The central question to ask yourself, your colleagues, and those formulating your AI strategy is, “Which activities currently being performed by humans could we make better in ABC ways by turning some or all of the task over to AI?” These answers will often come easily, but it’s worthy to cast a wide net to ensure you’re maximizing the potential of AI in your incremental workloads. Consider:

Implementing those of Microsoft’s extensive (and growing) range of “Co-Pilot” products that are likely to be most impactful to your organization;

Directly asking end users and line of business owners to identify pain points in their work and their wishlist for AI-enabled assistance;

Considering AI capabilities as part of any app rationalization or workload prioritization exercise concerning your application portfolio;

Using tools like process mining in Power Automate to identify pain points in business processes that AI (or automation) could perhaps make less painful;

Keeping your ear to the ground with competitors, “friendlies”, and industry groups as to how AI is making incremental improvements across your sector… as is so often the case with technology, incremental AI does not necessarily require you to have the best, but it does require you maintain parity with your competition.

This is ultimately a workload prioritization and roadmapping activity, so I recommend having a look at my “One Thousand Workloads” piece from several years ago (which addressed roadmapping for Power Platform but is quite applicable here, too).

Differential AI

Whereas Incremental AI includes the scenarios that improve upon solo human performance of activities that would have been performed anyway, “Differential AI” broadly encapsulates workloads that would not have likely been performed by humans alone, scenarios that are valuable to the organization because they allow you to jump out ahead of your competition.

Hallmarks of these differentiating, accelerative workloads are that they require a degree of creative thinking to dream up, can be challenging to implement, often involve deriving insights by mixing data that you already own but never had the ability to co-mingle, operate along a time dimension—that is to say, involve some sort of computation or connection that must be completed within a window of time that makes human intervention more challenging—and will require a degree of flexibility on your part at least in the early days as you figure out exactly how to harness the power of this newfangled thing you’ve built.

AlphaFold, developed by Alphabet’s DeepMind lab to make predictions about protein structure, is one of the world’s more successful examples of this type of differential AI. But differential AI need not offer groundbreaking promise for the future of humanity in order to be useful to one organization, team, or even to an individual’s world of work.

For example, a law firm, accountancy, or consultancy might use AI to produce regular guidance advising its clients of upcoming regulatory or legal changes that may impact their business in the various jurisdictions in which they operate. A typical firm would know who its clients are, in which countries and sub-national regions (e.g. states, provinces, territories, counties, autonomies, etc.) its clients operate, and possess data concerning its client’s products or services. This proprietary information could be used in conjunction with (a) publicly available information and (b) internal documentation—indexed by Cognitive Search, of course—concerning regulatory or legal changes taking effect over (say) the next 6-12 months to produce tailored guidance for individual clients.

Your particular differential AI workloads ought to be baked into your AI strategy’s roadmap from the start, and shepherded by technology and business leaders with a healthy tolerance for flexibility.

Scaling AI

In time most organizations will turn their attention from future readiness and establishing themselves with AI to focus instead on scaling (and sustaining) their investment in AI and the data platform upon which it depends. Put another way, one-time consolidation and readiness of data combined with a few AI-driven workloads does not a future-ready organization make.

First, organizations must tune their technical capabilities to support the scaling of AI. Some of this will be directly relevant to AI itself, for example, employing Machine Learning Operations (MLOps) to build, deploy, monitor, and maintain production models, incorporating rapid advances in the tech to your enterprise machine learning platform where you’ll land your workloads to operate them in your daily business.

Then there is the discipline required to maintain a healthy data platform over time, for example by architecting individual applications such that they don’t run off and spawn a new generation of one-off data siloes just as you’ve exorcised the data ghosts of your past, maintaining the index across your estate, etc.

Finally there are non-technical organizational implications. You see, since (IT) time immemorial IT organizations have structured themselves in siloed, technology-specific teams. This organizational model tends to produce a phenomenon that I call the “IT Tower of Babel”, wherein baskets of requirements are given to specific teams built around specific technologies. But AI is a team sport requiring artificial intelligence and machine learning expertise alongside expertise in data science, data platform and integration, infrastructure, security, and application development, as well. Re-building your IT organization to scale innovation by co-mingling different technical expertise throughout the org chart will, I believe, be instrumental in creating a real culture of AI within any organization.

Onwards

As we evolve, though, I expect the foundational principles to remain paramount:

Your AI strategy must be flexible, able to absorb tomorrow what we don’t fully grasp today;

Your strategy should offer value to the organization beyond specific AI-driven workloads.

I also expect that strategies built around our five pillars will be durable and able to evolve nicely over the next several years:

Consolidate your enterprise data into storage services addressable by AI and AI-enablement technologies;

Make Ready your enterprise data in terms of security, governance, lineage, quality, indexing, etc.;

Deploy Incremental AI workloads that make necessary activities more efficient, accurate, cost effective, etc.;

Balance your Differential AI roadmap between moonshots and quick wins;

Scale your AI investment through technical services such as MLOps and low-code, as well as organizational changes.

This is, I believe, the absolutely necessary work to ensuring that your organization does not find itself on the left-behind side of a two-tier economy wherein “firms that embrace tech are pulling away from the competition”.